What is Data Center Redundancy? N, N+1, 2N, 2N+1

Organizations continue to embrace digital transformation to support operations and drive business growth. As technology becomes part of every aspect of business operations, the threat and potential impact of downtime grow. To meet this need for high availability, businesses rely on third-party data centers to deliver resilient environments that can withstand service disruptions and ensure uptime and business continuity.

The causes of downtime run the gamut, from routine maintenance and hardware failures to natural disasters, cyberattacks and simple human error. Regardless of how downtime occurs, the results are the same: you cannot access your critical data and applications, operate your business or serve your customers. This hurts your bottom line by interrupting revenue streams, stalling productivity, degrading the customer experience and damaging your reputation.

The reality is that downtime can take a harrowing toll on your business. According to Gartner, the average cost of downtime is $5,600 per minute.* Losses accumulate quickly during a prolonged outage. The ITIC’s 11th Annual Hourly Cost of Downtime survey reported that 40% of enterprises said an hour of downtime can cost from $1 million to more than $5 million, not including legal fees, fines or penalties. The survey also noted that a catastrophic outage that interrupts a major business transaction or occurs during peak business hours can cost millions of dollars per minute.
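To see how quickly a per-minute figure compounds, here is a minimal Python sketch that multiplies a per-minute cost by outage length. The $5,600 rate is the Gartner average cited above; the function name `outage_cost` and the sample durations are hypothetical, and real costs vary widely by business.

```python
# Gartner's average cost of downtime, in US dollars per minute (cited above).
AVG_COST_PER_MINUTE = 5_600


def outage_cost(minutes: float, cost_per_minute: float = AVG_COST_PER_MINUTE) -> float:
    """Estimate the direct cost of an outage as duration x per-minute cost.

    Hypothetical helper: ignores legal fees, fines, penalties and reputational damage.
    """
    return minutes * cost_per_minute


if __name__ == "__main__":
    # Hypothetical outage durations, in minutes.
    for minutes in (1, 60, 240):
        print(f"{minutes:>4} min outage: ${outage_cost(minutes):,.0f}")
```

At this average rate, a single hour of downtime costs $336,000 and a four-hour outage costs $1,344,000.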

With the Uptime Institute reporting that more than 75% of businesses have experienced an outage in the last three years that caused substantial financial and brand damage, downtime is a real and direct concern.

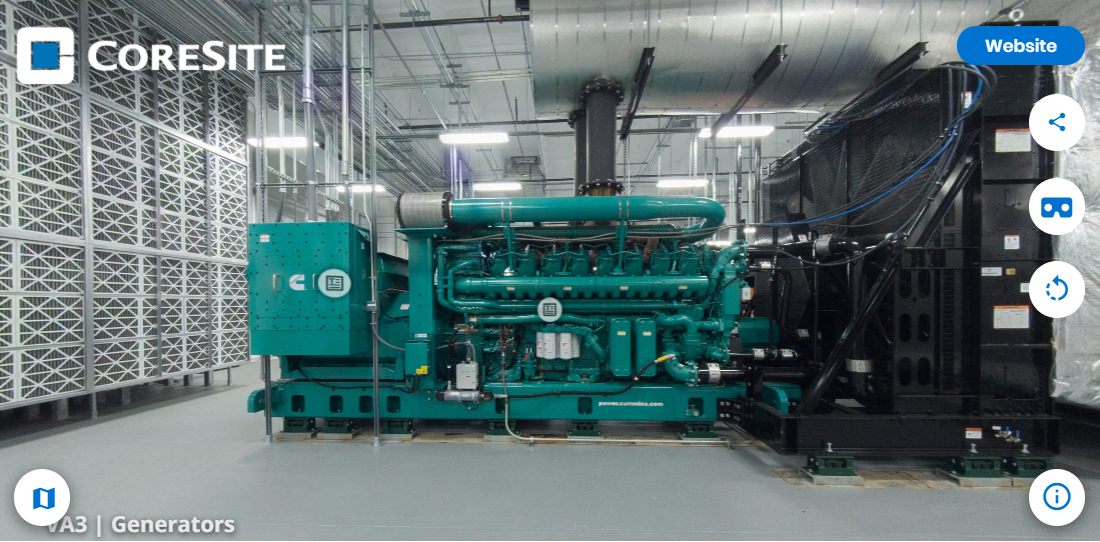

While downtime can impact every business, every organization has a different risk tolerance. A small business that does not operate 24/7 may be able to handle scheduled downtime during non-business hours for maintenance on critical hardware, such as uninterruptible power supply (UPS) systems, HVAC units or backup generators. However, an unplanned outage that cannot be quickly restored can be financially devastating. An enterprise with an international presence or around-the-clock operations cannot shut down even for planned maintenance and must rely on infrastructure redundancy within a data center for concurrent maintainability: the ability to take any component offline for maintenance without interrupting IT operations.

If your business relies on a third-party data center to support your critical servers, you need to understand the redundancy model the data center utilizes to ensure its architecture provides the protections your business needs to remain online.

What is a redundant data center?

Data centers address downtime by building redundancy into their infrastructure. A redundant data center architecture duplicates critical components, such as UPS systems, cooling systems and backup generators, so that operations can continue even if a component fails. While higher levels of redundancy provide better protection against downtime, a fully redundant design is expensive and not within every business’s budget.

The good news is that redundancy can be achieved in a variety of configurations, each offering a progressively higher level of protection to meet specific needs around performance, availability and cost. To find the architecture that meets your business needs, you must first understand your risk tolerance and how it aligns with the various data center redundancy models.

What are the data center redundancy levels?

Data center redundancy is not a one-size-fits-all endeavor. Building a redundant architecture becomes more expensive as more components are added. To gauge the right configuration for your organization, it is important to recognize the risks and capabilities of the various architectures, including N, N+1, N+2, 2N and 2N+1.

Also keep in mind that a given data center can operate with multiple redundancy models. A UPS can be 2N while the cooling system is N+1. The cooling system can be N+1 but still have a single point of failure in the piping. Likewise, power whips, the flexible cables that carry power from the distribution equipment to the racks, must be 2N for upstream redundancy to be effective; a single power whip would defeat the purpose of an N+1 or 2N UPS because it is a single point of failure.
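One way to reason about the power whip example is to treat each independent power path as the set of components it passes through: any component that appears in every path is a single point of failure, no matter how redundant the UPS layer is. The sketch below assumes a simplified model in which a path fails if any component on it fails; the function `single_points_of_failure` and all component names are hypothetical, and real electrical one-line diagrams are more complex.

```python
def single_points_of_failure(paths: list[set[str]]) -> set[str]:
    """Return components shared by every power path (hypothetical helper).

    If a component sits on every path from source to rack, losing it
    cuts power regardless of how many UPS units are installed.
    """
    if not paths:
        return set()
    return set.intersection(*paths)


# Hypothetical 2N UPS where both paths reach the rack through the same power whip.
shared_whip = [
    {"ups_a", "pdu_a", "whip_1"},
    {"ups_b", "pdu_b", "whip_1"},
]

# Hypothetical 2N UPS with a dedicated power whip on each path.
dual_whips = [
    {"ups_a", "pdu_a", "whip_a"},
    {"ups_b", "pdu_b", "whip_b"},
]

print(single_points_of_failure(shared_whip))  # {'whip_1'}
print(single_points_of_failure(dual_whips))   # set()
```

The set intersection captures the idea directly: adding a second UPS does nothing for a component that both paths still share.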

With all of these redundant architectures, an automatic transfer power design further minimizes interruptions. An automatic transfer design ensures that when one power source goes offline, the load is automatically shifted to the designated backup unit. It can be accomplished by installing an Automatic Transfer Switch (ATS) or logic-controlled switchgear, which avoids the downtime that can occur while waiting for a technician to switch over to the secondary unit manually.
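The switching decision an automatic transfer switch makes can be sketched as a small piece of selection logic. This is a simplified, hypothetical model: the `AutomaticTransferSwitch` class, the 480 V nominal voltage and the ±10% tolerance are assumptions for illustration, and real ATS units and switchgear controllers also handle transfer and retransfer time delays, generator warm-up and phase synchronization.

```python
from dataclasses import dataclass


@dataclass
class AutomaticTransferSwitch:
    """Toy model (hypothetical) of an ATS choosing between a primary and a backup source."""

    nominal_volts: float = 480.0  # assumed nominal supply voltage
    tolerance: float = 0.10       # assumed acceptable deviation: +/-10% of nominal
    active: str = "primary"

    def healthy(self, volts: float) -> bool:
        """A source is usable if its voltage is within tolerance of nominal."""
        return abs(volts - self.nominal_volts) <= self.tolerance * self.nominal_volts

    def update(self, primary_volts: float, backup_volts: float) -> str:
        """Select the load source from the latest voltage readings."""
        if self.healthy(primary_volts):
            # Stay on (or return to) primary; real units typically wait a retransfer delay first.
            self.active = "primary"
        elif self.healthy(backup_volts):
            # Primary lost: transfer automatically instead of waiting for a technician.
            self.active = "backup"
        else:
            # Both sources unavailable: the load is unpowered.
            self.active = "none"
        return self.active


ats = AutomaticTransferSwitch()
print(ats.update(primary_volts=480, backup_volts=480))  # primary
print(ats.update(primary_volts=0, backup_volts=478))    # backup
print(ats.update(primary_volts=481, backup_volts=478))  # primary
```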

Defining N

Before you assess each redundancy model, you must understand N.

N is the minimum capacity needed to power or cool a data center at full IT load. For example, if a data center requires four UPS units to operate at full capacity, N would equal four.

By definition, N does not include any redundancy, making it susceptible to single points of failure. This means that a facility at full capacity with an N architecture cannot tolerate any disruption—whether a hardware failure, scheduled maintenance or an unexpected outage. With an N design, any interruption would leave your business unable to access your applications and data until the issue is resolved.
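N follows directly from the IT load and the capacity of each unit: divide the load by per-unit capacity and round up. The sketch below assumes a hypothetical 1,000 kW IT load served by 250 kW UPS modules, which reproduces the four-unit example above, and uses hypothetical helpers `required_units` and `failures_tolerated` to show that an N design has no spare capacity to absorb a failure.

```python
import math


def required_units(it_load_kw: float, unit_capacity_kw: float) -> int:
    """N: the minimum number of units that can carry the full IT load."""
    return math.ceil(it_load_kw / unit_capacity_kw)


def failures_tolerated(installed: int, n: int) -> int:
    """How many units can fail (or be taken offline) while still carrying full load."""
    return max(installed - n, 0)


# Hypothetical sizing: 1,000 kW of IT load on 250 kW UPS modules.
n = required_units(it_load_kw=1_000, unit_capacity_kw=250)
print(n)                         # 4
print(failures_tolerated(n, n))  # 0: any single failure drops capacity below the load
```

Rounding up matters: a 1,100 kW load on the same modules needs five units, not four.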

N+1 Data Center Architecture

N+1 redundancy provides a minimal level of resiliency by adding a single component—a UPS, HVAC system or generator—to the N architecture to support a failure or allow a single machine to be serviced. When one system is offline, the extra component takes over its load. Going back to the previous example, if N equals four UPS units, N+1 provides five.

This configuration follows recognized design standards, which recommend one additional component for every four required to support full capacity. While N+1 introduces some redundancy, it still presents a risk in the event of multiple simultaneous failures. To minimize this risk, some data centers utilize an N+2 redundancy design to provide two extra components. In our example, this would provide six UPS units instead of five.
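The difference between N+1 and N+2 comes down to how many units can be lost at once before installed capacity falls below N. A minimal sketch using the four-UPS example, with a hypothetical helper `survives`:

```python
N = 4  # UPS units needed for full IT load (example above)


def survives(installed: int, simultaneous_failures: int, n: int = N) -> bool:
    """True if the remaining units can still carry the full load (hypothetical helper)."""
    return installed - simultaneous_failures >= n


designs = {"N": N, "N+1": N + 1, "N+2": N + 2}

for name, installed in designs.items():
    results = [survives(installed, k) for k in range(3)]
    print(f"{name:<4} ({installed} units) survives 0/1/2 failures: {results}")
```

Scheduled maintenance draws on the same margin: with N+1, one unit out for service leaves no spare if another fails, while N+2 still keeps one spare in that situation.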

Because of its simpler architecture, an N+1 design is cheaper and more energy efficient than the more sophisticated 2N and 2N+1 designs.
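Part of the efficiency difference comes from how heavily each unit is loaded. If the IT load is shared evenly across every installed unit, each runs at N divided by the installed count of its rated capacity, and UPS efficiency typically falls off at lighter loads. The sketch assumes perfectly even load sharing, which is a simplification; `per_unit_load` is a hypothetical helper.

```python
def per_unit_load(n: int, installed: int, it_load_fraction: float = 1.0) -> float:
    """Fraction of each unit's rated capacity in use, assuming even load sharing.

    it_load_fraction is the IT load relative to design capacity
    (1.0 = the data center is running at full IT load).
    """
    return it_load_fraction * n / installed


N = 4
for name, installed in {"N+1": N + 1, "2N": 2 * N}.items():
    print(f"{name}: {installed} units, each at {per_unit_load(N, installed):.0%} load")
```

At full IT load, each unit carries 80% of its rating under N+1 versus 50% under 2N; at a partial IT load of 60%, the 2N units would each sit at 30%.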

2N Data Center Architecture

A 2N redundancy model creates a mirror image of the original UPS, cooling system or generator arrangement to provide full fault tolerance. This means if four UPS units are necessary to satisfy capacity requirements, the redundant architecture would include an additional four UPS units, for a total of eight systems. This design also utilizes two independent distribution systems.

This architecture allows the data center operator to take down an entire set of components for maintenance without interrupting normal operations. Further, in the event that the primary architecture fails, the secondary architecture takes over to maintain service. The resiliency of this architecture greatly diminishes the likelihood of downtime.
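Because 2N splits capacity across two independent paths, the test that matters is whether either path alone can carry the full load. The sketch below models each path as a hypothetical count of UPS units and uses a hypothetical helper, `capacity_after_path_loss`, to check what remains when an entire path is taken down for maintenance or fails.

```python
N = 4  # units needed for full IT load


def capacity_after_path_loss(paths: dict[str, int], lost_path: str) -> int:
    """Units still in service after one whole distribution path goes offline."""
    return sum(units for name, units in paths.items() if name != lost_path)


two_n = {"A": N, "B": N}  # 2N: a full mirror of N units on each path

for lost in two_n:
    remaining = capacity_after_path_loss(two_n, lost)
    print(f"Path {lost} offline: {remaining} units left, full load carried: {remaining >= N}")
```

The surviving path runs at exactly N, so a further failure on that path during the outage or maintenance window would drop capacity below full load; that is the gap 2N+1 is designed to close.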

2N+1 Data Center Architecture

2N+1 delivers the fully fault-tolerant 2N architecture plus an extra component for an added layer of protection. This architecture can withstand multiple component failures, and even in the worst case, when the entire primary system goes down, it still maintains N+1 redundancy.
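Extending the same arithmetic, 2N+1 for the four-unit example installs nine units. A minimal sketch, assuming the extra unit can back up whichever path remains in service (how that spare is wired varies by design and is an assumption here); `spare_units` is a hypothetical helper.

```python
N = 4  # units needed for full IT load


def spare_units(installed: int, offline: int, n: int = N) -> int:
    """Spare units beyond N once `offline` units are out of service."""
    return installed - offline - n


two_n_plus_1 = 2 * N + 1
print(two_n_plus_1)                  # 9
# Worst case: an entire mirrored set of N units goes down.
print(spare_units(two_n_plus_1, N))  # 1, i.e. the survivors still form N+1
```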

This level of redundancy is generally used by large companies that cannot tolerate even minor service disruptions.

What do data center tiers have to do with redundancy?

Redundancy is integral to gauging data center reliability, performance and availability, yet adding components to the data center’s essential infrastructure is just one element of delivering it. The Uptime Institute offers a Tier Classification System that certifies data centers at four distinct tiers: Tier 1, Tier 2, Tier 3 and Tier 4.

Each successive tier has strict, specific requirements for the capabilities and minimum level of service a data center certified at that tier must provide. While the level of redundant components is certainly a factor, the Uptime Institute also evaluates staff expertise, maintenance protocols and more. These factors combine to deliver the following commonly cited minimum uptime levels**:

- Data Center Tier 1 Uptime: 99.671%, or no more than about 28.8 hours of downtime per year

- Data Center Tier 2 Uptime: 99.741%, or no more than about 22.7 hours of downtime per year

- Data Center Tier 3 Uptime: 99.982%, or no more than about 1.6 hours of downtime per year

- Data Center Tier 4 Uptime: 99.995%, or no more than about 26.3 minutes of downtime per year
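The downtime figures follow from the availability percentages: allowable downtime is (1 - availability) multiplied by the hours in a year. The sketch assumes a 365-day, 8,760-hour year; `annual_downtime_hours` is a hypothetical helper.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours; leap years ignored

TIER_AVAILABILITY = {
    "Tier 1": 0.99671,
    "Tier 2": 0.99741,
    "Tier 3": 0.99982,
    "Tier 4": 0.99995,
}


def annual_downtime_hours(availability: float) -> float:
    """Maximum downtime per year implied by an availability fraction."""
    return (1 - availability) * HOURS_PER_YEAR


for tier, availability in TIER_AVAILABILITY.items():
    hours = annual_downtime_hours(availability)
    print(f"{tier}: {availability:.3%} -> {hours:.1f} h ({hours * 60:.0f} min) per year")
```

For Tier 2 this works out to about 22.7 hours, and for Tier 4 to roughly 26.3 minutes.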

The increasing capabilities of each tier provide another reference point for understanding the level of performance a data center can deliver.

What is your risk tolerance?

Choosing the redundant architecture that meets your business requirements can be challenging. Mapping your business needs to an appropriate redundancy model is an essential step in ensuring your data center provider can offer an appropriate uptime guarantee within your budget. Finding the right balance between reliability and cost is key, because an ineffective redundancy model can have devastating consequences for your business.
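One rough way to frame the reliability-versus-cost trade-off is to price the difference in maximum allowable downtime between two availability levels. The sketch below uses Gartner's $5,600-per-minute average purely as a placeholder, along with the commonly cited Tier 2 and Tier 3 figures; your own per-minute cost may be far higher or lower, and `avoided_downtime_cost` is a hypothetical helper.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600; leap years ignored


def avoided_downtime_cost(current: float, target: float, cost_per_minute: float) -> float:
    """Difference in worst-case yearly downtime cost between two availability levels.

    Compares the maximum downtime each level permits; actual outages may be shorter.
    """
    minutes_saved = (target - current) * MINUTES_PER_YEAR
    return minutes_saved * cost_per_minute


# Hypothetical comparison: Tier 2 (99.741%) vs Tier 3 (99.982%) at a placeholder $5,600 per minute.
saving = avoided_downtime_cost(0.99741, 0.99982, cost_per_minute=5_600)
print(f"Up to ${saving:,.0f} per year")
```

With these inputs the difference is roughly $7.1 million per year, a figure to weigh against the price premium of the higher-tier facility.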

The end game is partnering with a data center provider that can meet your needs, offer guidance and deliver the assurance you need to keep serving your customers and growing your business.

*https://blogs.gartner.com/andrew-lerner/2014/07/16/the-cost-of-downtime/

**https://www.colocationamerica.com/data-center/tier-standards-overview