Inference Zones: How Data Centers Support Real-Time AI

CoreSite is introducing a new concept for artificial intelligence (AI) infrastructure called “Inference Zones,” areas in geographic proximity to the AI models that enable AI applications. In this blog, I will introduce Inference Zones and explain why they provide a critical foundation for the AI revolution.

What Is AI Inferencing?

According to Oracle, “In artificial intelligence, inference is the ability of AI, after much training on curated data sets, to reason and draw conclusions from data it hasn’t seen before. The AI model can reason and make predictions in a way that mimics human abilities.”1 After all the AI training, inferencing is the next step. It’s AI in action, and it’s AI delivering on its value proposition.

Inferencing is about decision-making. Based on our experience, you and I make decisions instantaneously, often without consciously recognizing the process. Experience is our training model, decisions our inferences.

The Large Language Models (LLMs) that serve as the core technology for many AI applications are designed to function very much like the human brain by analyzing current information against previous knowledge and making judgments that are actionable. For LLMs, that “previous knowledge” is the training data and the “current information” is the real-world data being fed into the application, often in real time.

In production AI applications, responsiveness is imperative. Some even require millisecond response time to ensure effectiveness. Network latency must be minimized. Depending on the AI use case, user experience may be degraded by latency, while in other cases latency can hinder real-time decision-making and even prevent the application from functioning properly.

How Latency Impacts AI Applications

Latency is primarily a function of distance. Greater physical distance between the application and the LLM inherently means longer data travel time. Also, keep in mind that data in motion through fiber optic cable needs to be “boosted” (amplified) along the way. Each of these amplification points, like any other network hop, is a potential point of failure and can increase latency and affect performance.
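To put rough numbers on the distance effect, here is a minimal sketch of the propagation-delay arithmetic. It assumes light travels through fiber at about two-thirds of its speed in a vacuum, which is a common rule of thumb rather than a figure from this post, and the function name and path lengths are purely illustrative. It covers propagation delay only and ignores routing, queuing and processing time.

```python
# Rough propagation delay over optical fiber.
# Assumption: light in fiber travels at about 2/3 of its vacuum speed,
# which works out to roughly 200 km per millisecond.

SPEED_OF_LIGHT_KM_PER_MS = 299_792.458 / 1000  # km per millisecond in a vacuum
FIBER_VELOCITY_FACTOR = 2 / 3                  # assumed typical value


def propagation_delay_ms(fiber_km: float, round_trip: bool = True) -> float:
    """Return the propagation delay in milliseconds for a fiber path."""
    if fiber_km < 0:
        raise ValueError("fiber_km must be non-negative")
    one_way = fiber_km / (SPEED_OF_LIGHT_KM_PER_MS * FIBER_VELOCITY_FACTOR)
    return 2 * one_way if round_trip else one_way


if __name__ == "__main__":
    # Illustrative path lengths, not measured routes.
    for km in (50, 500, 4000):
        print(f"{km:>5} km: ~{propagation_delay_ms(km):.2f} ms round trip")
```

Under these assumptions, every 100 km of fiber adds about 1 ms to the round trip before any equipment along the route is counted, which is why an application with a millisecond-level budget cannot sit far from the model it calls.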

“Geographic distribution plays a significant role in application performance,” according to a post on the AWS Machine Learning Blog. “Model invocation latency can vary considerably depending on whether calls originate from different regions, local machines or different cloud providers. This variation stems from data travel time across networks and geographic distances.”2
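One way to observe this variation yourself is to time the same call from different locations and compare the summaries. The sketch below is a generic timing harness, not part of any cloud SDK: `measure_latency_ms` and `fake_invoke` are hypothetical names, and the stand-in call should be replaced with your own model invocation.

```python
import statistics
import time
from typing import Callable, Dict, List


def measure_latency_ms(call: Callable[[], object], samples: int = 20) -> Dict[str, float]:
    """Time repeated invocations of `call` and summarize the results in ms."""
    if samples < 2:
        raise ValueError("need at least two samples")
    timings: List[float] = []
    for _ in range(samples):
        start = time.perf_counter()
        call()
        timings.append((time.perf_counter() - start) * 1000.0)
    timings.sort()
    # Simple nearest-rank approximation of the 95th percentile.
    p95_index = min(len(timings) - 1, int(round(0.95 * (len(timings) - 1))))
    return {
        "min": timings[0],
        "median": statistics.median(timings),
        "p95": timings[p95_index],
        "max": timings[-1],
    }


if __name__ == "__main__":
    # Hypothetical stand-in for a real model invocation.
    def fake_invoke() -> None:
        time.sleep(0.01)

    print(measure_latency_ms(fake_invoke, samples=10))
```

Running the same harness from each candidate deployment location gives a like-for-like comparison. The median shows typical responsiveness, while the p95 and max values expose the unpredictability that matters for real-time decisions.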

Consequently, one goal for AI application distribution should be to minimize the distance between the endpoint and the LLM. This is why Inference Zones matter: it’s essential to know how far the endpoints are from a data center during AI-powered decision-making.
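As a first approximation of "how far," the great-circle distance between an endpoint and candidate sites can be computed with the haversine formula. The sketch below is illustrative only: the function names are hypothetical, and the coordinates are approximate city centers, not the locations of any actual facility. Real fiber routes are longer than the great-circle path, so treat the result as a lower bound.

```python
import math
from typing import Dict, Tuple

EARTH_RADIUS_KM = 6371.0  # mean Earth radius

LatLon = Tuple[float, float]  # (latitude, longitude) in degrees


def great_circle_km(a: LatLon, b: LatLon) -> float:
    """Haversine great-circle distance between two points, in kilometers."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    dlat, dlon = lat2 - lat1, lon2 - lon1
    h = math.sin(dlat / 2) ** 2 + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def nearest_site(endpoint: LatLon, sites: Dict[str, LatLon]) -> Tuple[str, float]:
    """Return the name of the closest site and its distance in kilometers."""
    if not sites:
        raise ValueError("sites must not be empty")
    name = min(sites, key=lambda s: great_circle_km(endpoint, sites[s]))
    return name, great_circle_km(endpoint, sites[name])


if __name__ == "__main__":
    # Approximate city-center coordinates, used only as placeholders.
    candidate_sites = {
        "Chicago": (41.8781, -87.6298),
        "Washington, D.C.": (38.9072, -77.0369),
        "San Jose": (37.3382, -121.8863),
    }
    endpoint = (43.0389, -87.9065)  # hypothetical endpoint near Milwaukee
    site, km = nearest_site(endpoint, candidate_sites)
    print(f"Nearest candidate site: {site} (~{km:.0f} km great-circle)")
```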

CoreSite Pioneers AI Inference Zones

Many of the leading LLMs are hosted in the cloud by hyperscalers, such as CoreSite partners Amazon Web Services (AWS), Microsoft Azure and Google Cloud. Just as being adjacent to a cloud availability zone is important for application performance, being in an Inference Zone is essential for latency-sensitive AI applications where the data is distributed across multiple clouds. For optimal performance, AI applications, specifically inferencing apps, should be located adjacent to where the cloud regions – and consequently the LLM-based models – are deployed and where there is a concentration of network onramps to those clouds to provide high-performance, low-latency inter-cloud connectivity.

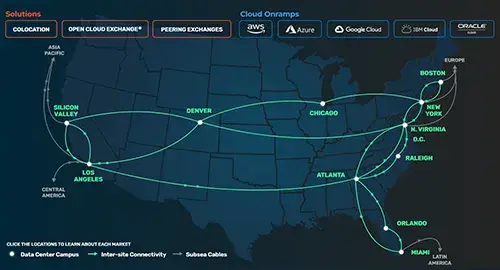

The leading cloud providers supporting LLMs are clustered in major metropolitan areas across the United States, and wherever you find a concentration of cloud regions and onramps, CoreSite is there. Our strategically located colocation campuses are in the heart of key Inference Zones including top tech markets in the U.S. such as Silicon Valley, Chicago and the nation’s capital.

In each of these Inference Zones, CoreSite offers dedicated, high-bandwidth network onramps into every major cloud provider. Deploying an inferencing application in a CoreSite colocation facility provides optimal performance by offering a low-latency, high-throughput data pipeline between endpoints and the LLMs. This greatly enhances the user experience and ensures inferencing operations are not impaired by network unpredictability.

In addition, CoreSite colocation campuses feature exceptional interconnection, and the Open Cloud Exchange® (OCX), a network services management platform, makes it easy for enterprises to control access to their service providers. This extensive ecosystem of connectivity empowers an AI application with an expansive reach to the cloud and the edge. CoreSite data centers are optimally placed in the “sweet spot” between the endpoints generating data for the AI applications and the cloud-based LLMs crunching that data and making decisions.

Cost optimization makes CoreSite even more appealing for AI applications. CoreSite customers can lower data center operation costs, turn services on and off instantly to manage spend on AI workloads and data, and reduce the data egress fees charged by cloud providers.

The following are just a few examples of industries currently leveraging CoreSite to support their AI-assisted services:

- Autonomous Vehicles: An automotive innovator uses CoreSite as the seamless connection between its driverless vehicles and the LLMs making split-second decisions.

- Music Streaming: One of the top streaming music services uses CoreSite's direct connections to the cloud, training AI on user listening history to understand preferences and accurately recommend the next song on the playlist.

- Social Media: One of the world's leading social media platforms uses CoreSite to enable AI algorithms that analyze user behavior and trends to serve up personalized, curated content.

Many leading companies choose CoreSite as a strategic partner to host their critical infrastructure, facilitate access to clouds and enable AI applications to transition from training to inferencing. CoreSite supports a variety of AI use cases, offering the cloud-adjacent infrastructure, reliable high-bandwidth connections, and industry experience needed to deliver the high performance and low latency that AI applications depend on – inside the data center and throughout Inference Zones.

With fledgling data center providers popping up to ride the AI wave, CoreSite's 20+ years of experience, commitment, operational excellence and proven track record in the data center arena stand out more than ever. AI is not a bandwagon to jump on – it is a technological revolution that is changing the world, and with the introduction of Inference Zones, CoreSite is helping lay the groundwork for an AI-powered future.

Know More

Where data centers are located matters for latency, business continuity and, as discussed above, inferencing.

Click here or on the map to learn more about CoreSite data centers, data center campuses and data center locations.

References

1. What Is AI Inference?, Jeffrey Erickson, Content Strategist, Oracle, April 2, 2024

2. Optimizing AI responsiveness: A practical guide to Amazon Bedrock latency-optimized inference, Ishan Singh et al., AWS Machine Learning Blog, January 28, 2025